Wednesday, 14 July 2010

Here is something I've been asked more than once, and seen asked on various forums as well. I'll paraphrase rather than quote one specific asker: We have an application written 15 years ago that's been working flawlessly. But when we run it on Windows 7, the users can't find the files it writes. Worse, there are no error messages, so they think they've saved the files, but when they go to C:\Program Files\MyGreatSoftware\UserExports - the files aren't there!

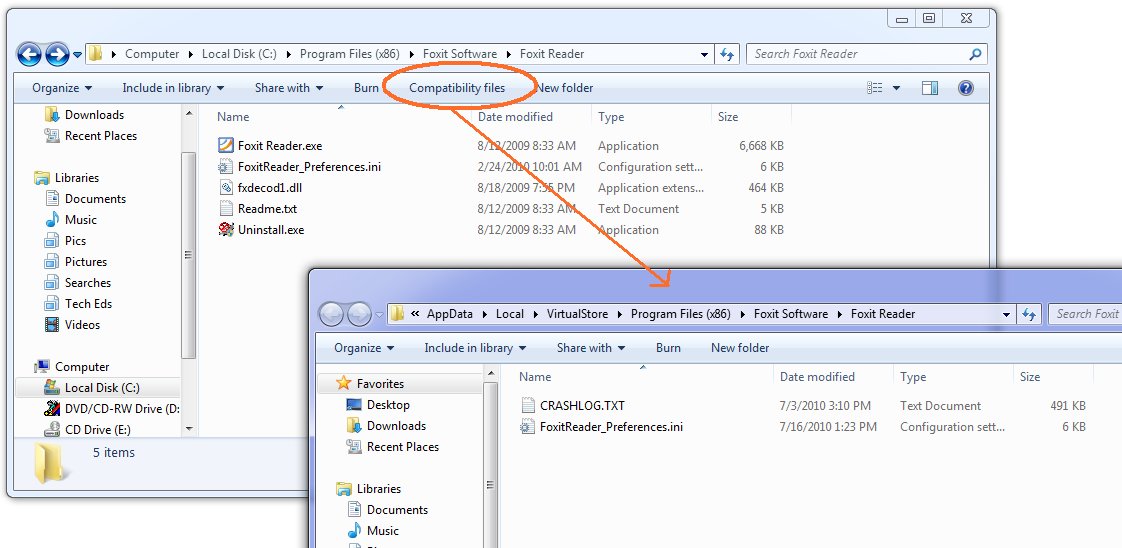

Often, the question trails off into a rant about how sneaky and mean Windows 7 is to somehow prevent access to Program Files but not give error messages. The rant might also include a paean to how amazing the lost files were and how many work-years of productivity have been lost now that these files cannot be found, and why this means you can't trust Windows to do something as simple as write a file to the hard drive. Sometimes, the asker has established that this is related to UAC and they are recommending everyone turn it off to avoid this disaster. I thought I would make some less drastic suggestions.

First, your files are not lost. A few people know this, but they then claim the files are almost impossible to find and no end user will ever find them. Let's tackle this one first because if you know this trick you may be able to get by without changing anything else about your application. Tell the user to go to the place they expect to find the files, say C:\Program Files\MyGreatSoftware\UserExports. Then have them look in the toolbar for a button that says Compatibility Files. Click it. Ta-da!
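If you'd rather work out the redirected location yourself than click the button, here is a minimal sketch. It assumes the usual Windows Vista and Windows 7 layout, where virtualized writes land under a VirtualStore folder in the user's local application data folder. The function name and the user name in the comment are made up for illustration.

```cpp
#include <cassert>
#include <string>

// Sketch: where UAC file virtualization would be expected to put a
// redirected write. localAppData is the user's local application data
// folder, e.g. C:\Users\kate\AppData\Local (hypothetical user name).
std::string virtualStorePath(const std::string& localAppData,
                             const std::string& originalPath) {
    // Expect a drive-qualified path such as C:\Program Files\...
    assert(originalPath.size() > 3 &&
           originalPath[1] == ':' && originalPath[2] == '\\');
    // Drop the "C:" prefix and keep the rest below VirtualStore.
    return localAppData + "\\VirtualStore" + originalPath.substr(2);
}
```

Given C:\Program Files\MyGreatSoftware\UserExports, this yields the same folder name under ...\AppData\Local\VirtualStore\Program Files\. Treat it as a way to understand what happened, not as something to build into the application.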

Ok, now the next thing is, why the heck are your files being written there? Because you are trying to write to a protected area and you don't have a manifest. You have several things you can do about this, and they boil down to two main things:

One, don't write to a protected area. You can get this by installing somewhere other than Program Files (not a good idea) or by changing the application to write to a better place.

Two, get permission to write to the protected area. This means running as administrator. Train the users to right-click Run As Administrator when they run the app, or train them to set the Compatibility Settings for the app (neither very likely) or ship the application with a manifest that includes requireAdministrator. No matter how you arrange this second thing, your users are not going to like agreeing to the UAC prompt every darn time.

So really, that brings you back to number one, don't write to a protected area. Use AppData instead - there's a simple function call to get that path on any machine (including older XP machines) and you'll be in fine shape. If you think your users can't find that, and the files are for the users and not just some internal settings, then use a folder under Documents - again, there's a simple function call that will get you the path.
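The post doesn't name the function call. One candidate that also works on XP is the shell's SHGetFolderPath, with CSIDL_APPDATA for AppData or CSIDL_PERSONAL for Documents. Here is a rough sketch along those lines. The helper names exportFolder and knownFolder are invented for illustration, and the Windows-only part is guarded so the path-building part can be tried anywhere.

```cpp
#include <cassert>
#include <filesystem>
#include <string>

#ifdef _WIN32
#include <windows.h>
#include <shlobj.h>   // SHGetFolderPathW, CSIDL_* constants
#endif

namespace fs = std::filesystem;

// Hypothetical helper: the folder this app exports into, below a per-user base.
fs::path exportFolder(const fs::path& base, const std::string& appName) {
    assert(!appName.empty());
    return base / appName / "UserExports";
}

#ifdef _WIN32
// Ask the shell for a per-user folder. Pass CSIDL_APPDATA for AppData
// or CSIDL_PERSONAL for Documents. Returns an empty path on failure.
fs::path knownFolder(int csidl) {
    wchar_t buffer[MAX_PATH];
    if (SUCCEEDED(SHGetFolderPathW(nullptr, csidl, nullptr,
                                   SHGFP_TYPE_CURRENT, buffer))) {
        return fs::path(buffer);
    }
    return fs::path();
}
#endif
```

On Windows you might call exportFolder(knownFolder(CSIDL_PERSONAL), "MyGreatSoftware") and then fs::create_directories on the result before writing. Because the folder belongs to the user, there is no UAC prompt and no virtualization involved.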

If virtualization makes you nuts - that your code thinks it's writing to C:\Program Files\whatever but really it's writing somewhere else, and the OS is cheerfully lying to it and saying all the writes succeeded - then put a manifest on your app. Doesn't matter whether it's requireAdministrator or asInvoker. Doesn't matter whether it's embedded (VS will embed them for you from 2008 on easily, and there are tools that do just manifest adding) or just a file of XML in the same folder as the exe. Once the app has a manifest, virtualization stops. Of course this may mean the users get all kinds of Access Denied errors that they don't like. Now you see why virtualization was invented. Should you rely on it? No. For one thing, it may go away in some future version of Windows. And it goes away when you add a manifest, which for many people happened when they migrated to a new version of Visual Studio. What you should do is understand it, including how to find the virtual store, so it doesn't make you quite so crazy. Now go turn UAC back on, Kate

Monday, 12 July 2010

There are two services I use not just every day, but many many times a day. One is email and the other is Twitter. Facebook and StackOverflow also get their share of attention, but one thing that sets Twitter apart from Facebook and StackOverflow is the proliferation of clients you can use to access it. You can go to the web page, and do it all in a browser, or you can get any of the many clients available to give you a richer experience. The same is true for email - I can use Outlook or I can use OWA and do it all in a browser. Recently I found myself facing a full week away from home and the office and with no way to get a VPN although I had great internet access. I could listen to CBC radio and watch Canadian TV but I could not bring my email in Outlook. The first day was ok, but not great. I found myself wanting to email people, and I had to open Outlook to poke around and get their email addresses, then paste them into the OWA new message. It was so different from the usual fast-as-thought process of typing the first letter or two of their names and pressing tab. I also had to delete my own spam, because I don't like server-based spam filters and have been really happy with my client-side spam settings in Outlook. The little preview windows weren't as informative as I wanted, my old appointments weren't showing up, there was no to-do bar, and deleting messages or waiting for the new window when I replied to messages just took too darn long. By day 3 I was about insane. Finally my favourite sysadmin (who I was smart enough to marry almost 30 years ago) got Outlook-over-http working for me and I could go back to normal. I was utterly astonished at the effect on my mood that not having my client application had on me, and the effect of getting it back. It was very distinct and unmissable. 
The browser solution just wasn't good enough for me - and OWA is an amazing feat of engineering, with a way richer UI (delete key works, F keys work, etc) than most browser-based solutions. It got me thinking, once my cheerful mood had let me catch up on some outstanding work, about client apps in general. Why do I only use Twitter in a browser? I've tried a whole number of clients but I always end up back in the browser. I think it's because clients have to be well-designed to work well. If they hog resources, jump in your face too much with focus stealing or balloon tips, or insist on being sized a certain way then they don't get a chance to show you their good side. Twitter is pretty young and I don't think we've really had time to winnow the good client features the way we have with email.

With that in mind, and believing a good client really will be a better experience, I've decided to try MetroTwit. I've heard really good things about it and I honestly believe that client apps make more sense for these sorts of information feeds. So far, I like it. I get toast for new tweets, a new tweet counter as a taskbar overlay icon, and such a delicate consumption of my CPU and disk activity that I can't tell if it's running or not. You might also be interested to hear why the developers chose WPF, and what that led to for the team:

over just a couple of months, what we’ve achieved with MetroTwit was simply not possible without WPF considering the few precious midnight hours we put into it on most days. According to the rest of the team (the real developers), apparently I owe much to data-binding which I’ve been told is nothing short of a miracle.

If you have a choice of using a browser or using a client app, which do you choose? Is it always the same or does it vary with the business purpose you use it for? While we don't represent our users exactly, we can still learn from our own personal choices and our emotional reactions to software. Kate

Saturday, 10 July 2010

I'm not sure when this started, but DevX has a whole area for Visual Studio 2010 articles. They've got handy links to download a trial and a training kit, walkthroughs of creating extensions (a simple blogging one, and adding your own language to the IDE), and lots more. It's a combination of articles, webcasts, and downloads that cover not just Visual Studio but some of the things you can create with it and what's new in related tools. Of course I've seen some of the material before, but that just shows that it's comprehensive. Take a look around! Kate

Thursday, 08 July 2010

I'm an optimist. I'm always looking for (and usually finding) the bright side. I think this has served me very well over the years. Recently I read an interesting Fast Company article (an excerpt from a book) that described a problem solving approach based on looking for the bright side - well actually, what they call the bright spot:

Our focus, in times of change, goes instinctively to the problems at hand. What's broken and how do we fix it? This troubleshooting mind-set serves us well -- most of the time. If you run a nuclear power plant and your diagnostics turn up a disturbing signal once per month, you should most certainly obsess about it and fix the problem. And if your child brings home a report card with five As and one F, it makes sense to freak out about the F.

But in times of change, this mind-set will backfire. If we need to make major changes, then (by definition) we don't have a near-spotless report card. A lot of things are probably wrong. The "report card" for our diet, or our marriage, or our business, is full of Cs and Ds and Fs. So if you ask yourself, What's broken and how do I fix it?, you'll simply spin your wheels. You'll spend a lot of time agonizing over issues that are TBU - true but useless.

The article gives a number of examples of not trying to find the major underlying system cause and solve it with huge missions, but instead trying to find a localized success and encouraging it to spread. Interesting concept and well worth a read. How could you apply it to that totally-messed-up project or that new hire who has turned out to be so wrong?

Kate

Tuesday, 06 July 2010

I watched a video the other day from someone whose blog I read and whose presentations and sessions I have enjoyed. I was drawn to it because it said C++ in the title, and it came from someone who is really not a C++ person. Oh my. I did manage to last about 12 minutes, until it was pretty clear there really wasn't going to be any C++ content at all. There even was a tiny bit of useful content in those 12 minutes. But it was all mixed in with joking between the hosts, including something that must have been a running inside joke, because they sure were liking it and I didn't get it, snips of music, throwaway lines about "as we all know" when I didn't know what they were talking about but it might have been interesting to explore, and actual interesting things. Plus, the two hosts disagreed a lot, which I suppose was interesting, but impeded my ability to actually learn what one of them was trying to convince me of or explain to me. I couldn't watch to the end of it.

It got me thinking about the number of times I have read people blogging that they don't bother listening to podcasts. The theory goes that podcasts and videos are super quick to produce - just turn on the camera or Camtasia, plug in the mike, press record and off you go. A lot of them are not edited at all. And it shows.

There are good podcasts (.NET Rocks comes immediately to mind, and not just because I appear on it once or twice a year) and they are the product of significant effort. There is conversation in advance about "what are we going to talk about". There is awareness of how the conversation is going, and genuine work during the conversation to keep it flowing well. And there is editing afterwards. All of this combines to make a higher quality experience for the listener, which is the point, right? You can find a zillion bad podcasts, and the good ones have one thing in common: they are motivated by the experience for the listener, not the ease or fun for the creator.
I wish that wasn't so, I wish there was a magic easy way to get your knowledge out there to the community that was quicker than blogging or writing books or teaching courses or traveling to far away places and getting up on stage - but there isn't, they all take work. I have all this in mind while I'm recording some screencast/tutorial type videos. When I give an actual presentation, I probably say um and ah and you know. I hope I say it less than some folks, but I still expect I say it. I know for a fact I say it when the mike is plugged in. How do I know that? Because right after I hit Stop on the recorder, I hit Edit. And I listen to the whole thing and whenever I hear um and ah and you know, I edit it out. I also edit out the pauses and the messups. I think that's the exchange we make between in person and recorded materials. An in person presentation or session or training is spontaneous and adjustable - you can ask me a question and I can go deeper on that one thing you want to know more about. When it's recorded, you can't interact. But hey, you get a crisper and more polished presentation. You don't have to ever watch a demo fail. Products that in real life take 15 seconds to launch are already launched when the demo starts, or appear to launch in two seconds because I edited out 13 seconds of splash screen. This means that producing a ten minute video is going to take me way more than ten minutes. First, there's prep time - writing slides, creating starting point demo code, practicing a demo and ensuring that I have a good example that really covers the point I want to make, rehearsing to be sure I can do it crisply, and all of that. When I present at a conference or user group, deliver training, or even just visit a client one on one to show them something, I have to do all that prep. 
It's often about 3-5:1 - for a one hour talk there will be 3-5 hours of prep - and that's if you know the material cold, it doesn't count learning what's new in product X or learning how to do thing Y. Don't underestimate this effort. Folks who skip it find themselves the bad example in other people's blogs. Then there's rehearsing the whole talk a few times, which I generally don't do for recorded videos but have to do for in person material. What recorded videos need is about 12 minutes to actually record it, with pauses and ums and false starts and all, then 30 minutes or so to play that and edit out the two minutes that don't belong. I'm not complaining, mind you. I think if a job's worth doing, it's worth doing right. And for videos, that means prep beforehand and editing afterwards. Is the medium the message? Well, you can't do the exact same thing in different media (eg in person or video) and expect to deliver the exact same message. Um in person and um in your video carry different messages. Joking with the guy who introduced you at a user group and joking with the guy who introduced you at a 5000 person keynote carry different messages. McLuhan was right. Kate

Sunday, 04 July 2010

About 6 weeks ago I blogged about the technology behind the amazing Olympics experience I had here in Canada watching CTV, and my American neighbours had watching NBC, as well as the Norwegian and French coverage. Now another case study has been released from that work. This one focuses on the way the broadcasters were able to insert ads (to pay for all that glorious coverage) and build highlights packages. As it says in the case study:

NBC teamed with premier technology vendors, led by Microsoft, to cover 4,485 hours of 2010 Winter Olympics events in HD via Microsoft Internet Information Services (IIS) Smooth Streaming to a video player based on Microsoft Silverlight. In addition to pleasing sports fans, the programming offered an audience of more than 15.8 million unique visitors to the many advertising partners of NBC. The exceptional capability of IIS Smooth Streaming and Silverlight technologies gave technology vendors the tools they needed to deliver midstream ads while providing an engaging experience for Olympics enthusiasts.

I find it interesting that Canada, with one-tenth the population of the USA, consumed double the hours of video. Not per person mind you, but total. We were lucky enough to have a lot more to choose from. As you can see when you look at the unique visitor counts, it is mostly that we each watched a whole lot of Olympics back in the dark winter months. Definitely worth a read to see how it was done and how it will no doubt continue to be done in the future. Kate

Friday, 02 July 2010

I love demoing restart and recovery. This is the feature that will bring many users to Windows 7 - getting your work back even after the application blows up. Sure, Word saves your document every 20 minutes - but why does it seem I always lose 19 minutes of work? In my simple demo apps, there's a form with one or two controls on it, and one is some text, and when the application blows up I write all the text out, and then on restart I reload it from wherever I wrote it to. It's simple and for most applications it's exactly what you want.

But for some applications that approach won't work as well. For example, what if you have an MDI application and the user has 20 or 30 documents open, each with unsaved changes, when the application is terminated? There may not be time to save all those unsaved documents. And then on restart, perhaps the user doesn't want them all restored, or at least not with their real names... it can get complicated. You are going to need to know your own application and make an intelligent decision about how to handle restart and recovery for your application.

But it might help you to know how a certain MDI application near and dear to all our hearts does it ... Visual Studio. Visual Studio does a Word-like autosave every 5 minutes. When the application blows up, it doesn't do anything in particular on the way down. But when it's restarted, it takes a look at the files it has auto recover versions of and asks if you want any of them. You might, you might not, and as the user it's up to you. Zain Naboulsi has a tip-style blog post that explains how it works and how you can control it. Read that for its own sake, since you're probably a Visual Studio user and should be using it as effectively as you can. But also take the opportunity to think about a good design for restart and recovery in your application, which is probably a little more complicated than one text box and one other control. Kate
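For anyone who wants to experiment with the autosave-then-offer design described in this post, here is a rough, portable sketch. It is not how Visual Studio implements it, and it doesn't touch the Windows restart and recovery APIs. The Document struct, the .recover extension, and both function names are invented for illustration.

```cpp
#include <cassert>
#include <filesystem>
#include <fstream>
#include <string>
#include <vector>

namespace fs = std::filesystem;

// Hypothetical in-memory document for the sketch.
struct Document {
    std::string name;
    std::string text;
    bool dirty = false;
};

// Called on a timer: write a recovery copy of every document that has
// unsaved changes. Nothing special happens when the app goes down.
void autosave(const std::vector<Document>& docs, const fs::path& recoveryDir) {
    fs::create_directories(recoveryDir);
    for (const Document& doc : docs) {
        if (!doc.dirty) continue;
        assert(!doc.name.empty());
        std::ofstream out(recoveryDir / (doc.name + ".recover"), std::ios::trunc);
        out << doc.text;
    }
}

// Called at startup: list the recovery copies so the user can choose
// which ones, if any, to bring back.
std::vector<fs::path> findRecoverable(const fs::path& recoveryDir) {
    std::vector<fs::path> found;
    if (!fs::exists(recoveryDir)) return found;
    for (const auto& entry : fs::directory_iterator(recoveryDir)) {
        if (entry.is_regular_file() && entry.path().extension() == ".recover") {
            found.push_back(entry.path());
        }
    }
    return found;
}
```

The point of the split is that the expensive work happens in the background while the application is healthy, so a crash with 30 open documents doesn't have to save anything, and the choice of what to restore stays with the user.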

Wednesday, 30 June 2010

I'm recording some videos again (I'll announce when the project is live) and I'm doing it, as I really like to these days, in a bootable VHD. I've got the environment set up just the way I like it, without messing with my day-to-day setup, and as an extra bonus I avoid the distractions that Outlook, Instant Messenger, Skype, and the Favorites menu in my browser have to offer. When I went to record the first video I realized I had forgotten to install Camtasia in the VHD so I quickly downloaded a trial from www.techsmith.com. I got to work recording my video, editing it, and so on. Then I rendered the video. This can take a few minutes, but I don't complain because I know it's doing a lot of work. But I got a great surprise ... this latest version uses the taskbar progress bar overlay, so that I can put the rendering into the background and work on something else full screen while it renders. I can still see at a glance how it's doing, but I don't have to keep the little progress window on top. It's a really nice touch.

Then as serendipity would have it I spotted this video on Channel 9 that calls out this and other Windows 7 features in TechSmith products. It's only 9 minutes long, so go and watch it. And if you haven't added Windows 7 features to your client apps yet, why not? It really makes a difference.

Kate

© Copyright 2024 Kate Gregory
